I’ve avoided writing this particular column for months, partly because generative AI is such a hot-button topic at the moment, and partly because I needed to work through my own thoughts and feelings on the matter. And maybe because I suspected it would be one long-ass article.

I’ve been feeling this weird sense of jamais vu, that is, increasingly feeling that what should be familiar no longer is. We’re moving rapidly into a “sci-fi” world, and the things that used to excite me in the abstract – like self-driving cars and missions to Mars – no longer do. Mainly because they are being shepherded in by huge corporations who are only motivated by profit, and are vastly under-regulated as they pursue new technology that’s rumbling like a mega-quake throughout our society. Some companies are even removing safety mechanisms which had helped make their products more benign.

But Generative AI represents a whole new level of tech insanity.

Text AI – Telling Little Lies

To be clear, what we have today is not true Artificial Intelligence. The Generative AI systems for text are called Large Language Models, and they are basically learning apps that are trained on large amounts of “content” – what the tech companies call the photos and text we users upload to the internet. As each of these apps consumes more content, it finds patterns which it can emulate. What word is likely to come next? How many fingers does a hand usually have? How have humans responded to other humans when asked a similar question?
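To make the “what word is likely to come next?” idea concrete, here is a toy sketch in Python. It is nowhere near how a real Large Language Model works under the hood (those are neural networks trained on vastly more text). It simply counts which word follows which in a tiny made-up sample, then picks the most common follower. The sample sentence and the function names are invented purely for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training "content": one short sentence.
sample = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(sample)

print(predict_next(model, "the"))     # "cat" follows "the" most often in the sample
print(predict_next(model, "dragon"))  # never seen in the sample, so None
```

The pattern is the whole trick: the more text the counter sees, the more plausible its guesses get, even though it has no idea what a cat or a sofa is.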

Over time, they get better and better at emulating human output, at least on a general level. This works best on non-fiction. Ask an AI chatbot for a snippet of code and it can write it for you quickly. Ask it to describe a home for sale (particularly handy if you are a real estate agent) and it can shave minutes off of this repetitive task, which over days and weeks can really add up.

But here’s the thing. These apps are trained to give you an answer, and if they don’t have one, they often just make things up. There are many examples of this floating around the web. Ask for a restaurant recommendation, and you may be recommended a restaurant that doesn’t even exist.

One author on Mastodon asked an AI chatbot for the plot of one of his novels, and it mixed content from his book and another listed on the same page, and for good measure threw in a fake plot point made from whole cloth.

But these relatively harmless mistakes and fabrications (in the tech industry, they call them “hallucinations,” but that’s a whole ‘nuther thing) are not the end of it. Here are a couple more damaging falsehoods, reported on the Ars Technica site:

It’s a big problem when an AI bot generates false information that can potentially mislead, misinform, or defame. Recently, The Washington Post reported on a law professor who discovered that ChatGPT had placed him on a list of legal scholars who had sexually harassed someone. But it never happened—ChatGPT made it up. The same day, Ars reported on an Australian mayor who allegedly found that ChatGPT claimed he had been convicted of bribery and sentenced to prison, a complete fabrication.

A second issue with these apps is that they are trained on today’s internet, which is full of great things – human knowledge, amazing art, and truly inspiring stories. But the internet is also full of bigotry, misogyny, queerphobia, racism, ableism, and all of their dark cousins, and so those things necessarily become part of the patterns Generative AI learns. Gizmodo dug into this last month:

“Depending on the persona assigned to ChatGPT, its toxicity can increase up to [six times], with outputs engaging in incorrect stereotypes, harmful dialogue, and hurtful opinions. This may be potentially defamatory to the persona and harmful to an unsuspecting user,” the researchers said in the study. Worse, the study concludes that racist biases against particular groups of people are baked into ChatGPT itself. Regardless of which persona the researchers assigned, ChatGPT targeted some specific races and groups three times more than others. These patterns “reflect inherent discriminatory biases in the model,” the researchers said.

At its best, text Generative AI can be a time saver in certain cases. At its worst, it can destroy reputations.

Image AI – You’ve Seen This Before

When MidJourney first hit the news, I was super excited about it. I jumped in to give it a try. The ability to create amazing art was addictive, the surprises generated by each new prompt and iteration glittering, colorful candy for your eyes. No longer would hours-long searches be required to find the perfect art. In a matter of minutes, you could generate it yourself, with a little help from your AI friends.

And the whole thing about starting each prompt with the word “imagine” was genius. Who doesn’t want to imagine something great?

Sure, there were little weirdnesses. Eyes were usually a little strange and undefined. Hands often had more than five fingers, and sometimes extra appendages appeared randomly. Remember, AI doesn’t really “know” what a human should look like. It’s merely extrapolating from millions of examples.

I also noticed this odd thing with generated images of gay and lesbian couples. AI seems to think “gay” or “lesbian” equals “twins” and often generates two very similar-looking people. And getting it to generate anything but white folk was difficult at best, which again goes back to the racism built into our culture and online platforms.

Over the ensuing weeks and months, I learned more about how these apps worked. You can actually tell MidJourney to create art “in the style of,” and it will spit out what you want using an artist’s signature style.

But here’s the thing. That artist never gave permission to MidJourney to license their style, and they have never received a penny for the use of their skill.

So what, you say? Just avoid asking it for art in the style of someone? It doesn’t matter. All of the art MidJourney creates was sourced from someone’s art, and even if you can’t trace it back to exactly whose, it’s still de facto theft.

And AI art can’t be copyrighted, meaning that if you use it on your cover, there’s no way to protect yourself from a lawsuit from the artist who pioneered the original style. Even if this is extremely unlikely to happen to you, it almost certainly will happen to someone as artists begin to fight back.

I recently created a cover for my re-release book “The Autumn Lands.” Finding the source art without using anything AI took twice as long as it used to, as my go-to stock art site is now polluted with tons and tons of AI generated art. It’s beautiful – remember the whole candy thing up above? But there’s a depressing sameness to it. Many of these “artists” have uploaded tens of thousands of images to the web, and often they are the same image over and over and over with only slight variations.

A recent flap over at Tor illustrated some of the pitfalls of this whole mess, as an artist there either didn’t know a source file they bought was AI, or knew and concealed that fact from the publisher.

And the stock photo companies have been slow to respond. Some are insisting there is no AI content on their platforms and then slow-walking it when you report it. Others are openly embracing these new “content creators.”

At the very least, we need reliable tools and labeling to let us avoid AI generated files when looking for art for our projects. In the stock art space, knowing when art was uploaded would be a short-term fix (there are ways to find out, but it’s not easy) and the ability to filter out particular artists from my search would be a huge step forward (and could be used to crowdsource likely culprits for the website to ban).

My initial plan was to only use AI art in things I would never use paid art for – mostly social media post imagery. But even that is problematic, for the reasons explained above, so I am no longer using AI generated art in any capacity.

Maybe someday that will change, but for now, I’ve come to believe that any use of AI generated images is detrimental to the artists I love and to society in general.

Impact on Authors – Tell Me (My) Story

And what about AI storytelling?

A couple months ago, I asked ChatGPT to write a short fantasy story in my style. It spit out a trite fantasy tale that was full of choppy sentences, simple present tense descriptions, and cliches (I mean, seriously, “trusty steed”?). But it was set in the city of El Dorado, and I lived in El Dorado Hills until 2015. So it clearly had glommed onto something about me.

For now, generative AI’s fiction writing abilities make it unlikely that it will win a Hugo or Pulitzer Prize any time soon. But it doesn’t have to be good to cause damage.

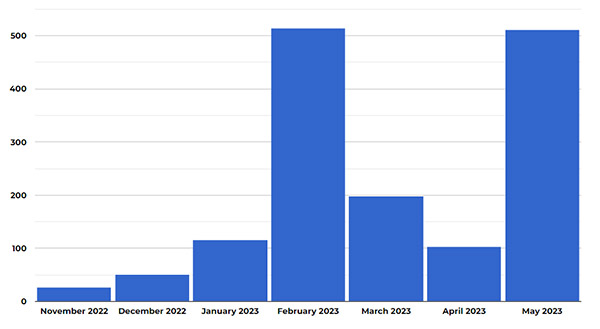

Neil Clarke over at Clarksworld has been documenting his magazine’s issues with AI for a few months now. By and large, the issue doesn’t seem to lie with legitimate authors. Instead, scammers hoping to make a few bucks have been breaking down the gates and stuffing Clarkesworld’s submission box with AI generated crap. To be clear, these are not stories that would ever be published, even if they were written by humans. But the sheer volume of AI generated submissions received is staggering. The chart below shows the quick rise of AI fiction. The pause in March and April was due to the first wave of mitigation procedures Clarkesworld put into place, including a stark warning that anyone found to be submitting AI-written work would be banned for life:

And that figure of 500 stories in May was as of May 18th. Let’s do a little math here. Let’s say each short story averages twenty pages. That’s 10,000 pages of content, or 17,222 pages by month’s end if submissions keep arriving at the same pace. If the average novel is 250 pages long, that means that the poor readers at Clarkesworld are having to wade through sixty-nine novels’ worth of AI dreck this month.
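For anyone who wants to check the numbers, the arithmetic can be reproduced in a few lines of Python. This is a back-of-the-envelope sketch: it assumes submissions keep arriving at the same daily pace for all 31 days of May, the page counts are the rough guesses used above, and the variable names are just illustrative.

```python
# Figures from the column; pages per story and per novel are rough guesses.
stories_so_far = 500      # AI submissions counted as of May 18th
days_elapsed = 18
days_in_may = 31
pages_per_story = 20
pages_per_novel = 250

pages_so_far = stories_so_far * pages_per_story

# Assumption: the same daily pace continues through the end of the month.
projected_pages = pages_so_far * days_in_may / days_elapsed
novels_equivalent = projected_pages / pages_per_novel

print(pages_so_far)              # 10000
print(round(projected_pages))    # 17222
print(round(novels_equivalent))  # 69
```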

Neil has been at the forefront of this, and has come up with a number of ways to identify this content (which he doesn’t share because he doesn’t want to tell the spammers how to get around the new barriers). But how much time is now being devoted to this effort, and how can that not harm their efforts to find and share work from legitimate writers (like me)?

And as AI gets better, screening it out will only get harder.

One other knock-on effect – there are now a number of tools that claim to be able to detect Generative AI writing, but their accuracy rates are not great. Authors who are falsely flagged might see their work banned at certain venues, even if they’ve never touched AI.

And what if you’re an author using Grammarly or ProWritingAid? As these tools integrate generative AI into their own apps, the suggestions they make might inadvertently cause your own work to sound like AI generated text. James Nettles of ConTinual looks at this issue in depth in this great video.

Don’t discount human error as a factor in all of this. A Texas professor recently blocked the graduation of half of his students after asking ChatGPT if it wrote their papers. It lied (or hallucinated LOL) and said yes, but anyone with even a passing understanding of these things knows it doesn’t work like that. Remember, this is not true AI. Yet.

Websites – The Next Frontier

The last thing that really gives me chills about this current iteration of “AI” is the effect it may have on websites and the web in general. Bing and Google are testing out AI models that would serve you up the information you’re looking for in a quick and easy format, which sounds great, right? But consider:

The information has to come from somewhere. Mark and I have spent decades building various LGBTQ directories, which Google has steadily undermined with their algorithm. Now they seem to be planning to just steal the info to present to their visitors, without a) ever sending those folks to our site, b) compensating us, or c) even telling the end user where it came from.

Add in Generative AI’s tendency to just make shit up, and you have a recipe for disaster.

And in a year or two, when all the websites that provided the information have been run out of business, where will they get their information?

My guess is that you will pay them for it, and Google will essentially become the web, a big tech information landlord. And maybe that’s what they want.

I could go on and on about this (and apparently I have). There are other fronts, including Apple’s new AI narration tool that neatly cuts paid narrators out of the loop. If this were intended to help those with vision issues enjoy content that’s not available in an audio format, I would have a lot less problem with it.

It Needs to be Regulated

We’re at a point where “move fast and break things” has become one of the three great threats to our ongoing survival. Break too many things all at once, and everything collapses. Companies have become predatory experts at ensnaring us in their sites and then sucking us dry – this article on the enshittification of TikTok is a must read to understand how this business model works.

But this Generative AI thing goes beyond that into outright theft. It’s a theft of content from the artists, writers and other “content creators.” It’s a theft of time from everyone who now has to deal with the boiling cauldrons of shit these apps are generating. And it’s a theft of livelihoods from anyone whose business may soon be made obsolete.

With proper regulation, Generative AI could do a world of good, simplifying repetitive tasks and acting as an assistant to writers and artists and the like instead of their replacement. But those of us who provide the content in whatever form need to be credited and compensated, or the whole thing will come crashing down.

I wish I had more faith in our governments to do something about this. The EU is taking initial steps, and maybe the US will follow. After all, this is a threat to everyone, not one political party over another, and tech issues are one thing that has occasionally brought everyone together.

So I am trying to be positive, to put the word out about what’s happening, and to have faith in our ability to do what’s right and to mend what these companies have so cavalierly broken.

Always, hope.

This column was 100% written by a tired writer human.